I'm happy to share my new article on my first Terraform script. It is indeed infrastructure as code (IaC).

I wanted to create an Azure Key Vault with the soft delete option enabled, using Terraform. Soft delete is the recommended practice for Key Vault because it lets us recover from a delete operation on the key vault within 90 days.

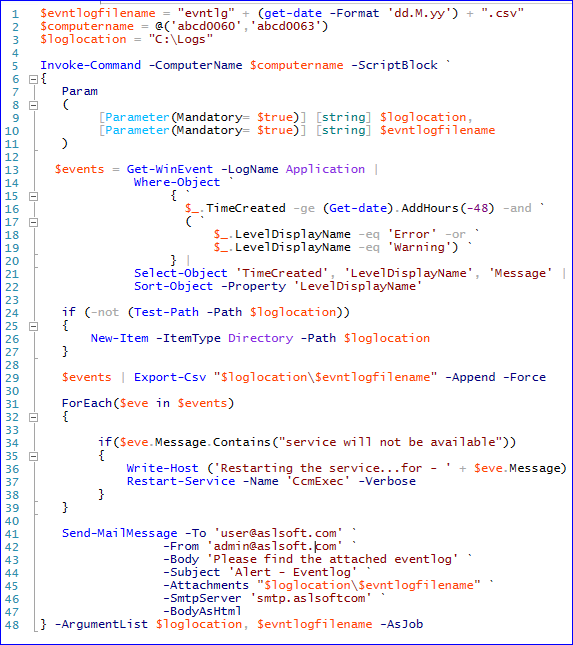

I have copied my code below.

An important note: Terraform did not have an out-of-the-box option to enable soft delete, so to implement it I used the "local-exec" provisioner to run Azure CLI commands on the local machine. After the key vault is created by the steps above it, the provisioner logs in and updates that key vault to turn soft delete on.

Code:

Main.tf: the main file that holds all the code that gets executed.

    provider "azurerm" {
      alias           = "subscription_dev"
      tenant_id       = "[TENANT ID]"
      subscription_id = "[SUBS ID]"
      client_id       = "[CLIENT ID]"
      client_secret   = var.client_secret
    }

    resource "azurerm_resource_group" "rg" {
      name     = var.resourcegroupname
      location = var.location
      provider = azurerm.subscription_dev
    }

    resource "azurerm_key_vault" "kvpoc" {
      name     = var.kvname
      location = var.location

      # Reference the resource group resource (not the variable) so that
      # Terraform creates the resource group before the key vault.
      resource_group_name = azurerm_resource_group.rg.name

      tenant_id                       = var.tenant_id
      enabled_for_deployment          = true
      enabled_for_disk_encryption     = false
      enabled_for_template_deployment = true
      sku_name                        = "standard"
      provider                        = azurerm.subscription_dev
    }

    resource "null_resource" "kv-soft-delete" {
      # Log in to the Azure CLI as the service principal.
      provisioner "local-exec" {
        command = "az login --service-principal -u ${var.client_id} -p ${var.client_secret} --tenant ${var.tenant_id}"
      }

      # Enable soft delete on the key vault created above.
      provisioner "local-exec" {
        command = "az resource update --ids ${azurerm_key_vault.kvpoc.id} --set properties.enableSoftDelete=true"
      }
    }
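As a variation on the `null_resource` above, here is a minimal sketch (not the only way to do it) that combines both commands in one `local-exec` provisioner. It passes the credentials through the provisioner's `environment` map instead of interpolating them into the command string, and adds a `triggers` entry so the step runs again if the vault is recreated. The resource name `kv_soft_delete`, the `AZ_*` and `KEY_VAULT_ID` variable names, and the use of a POSIX shell are assumptions for illustration. On Windows `cmd` the variables would be written as `%VAR%` instead of `$VAR`.

```hcl
resource "null_resource" "kv_soft_delete" {
  # Re-run the provisioner whenever the vault ID changes,
  # for example when the key vault is destroyed and recreated.
  triggers = {
    key_vault_id = azurerm_key_vault.kvpoc.id
  }

  provisioner "local-exec" {
    # "$NAME" is expanded by the shell, not by Terraform
    # (only "${...}" is Terraform interpolation).
    # "&&" ensures the update only runs if the login succeeded.
    command = <<-EOT
      az login --service-principal -u "$AZ_CLIENT_ID" -p "$AZ_CLIENT_SECRET" --tenant "$AZ_TENANT_ID" &&
      az resource update --ids "$KEY_VAULT_ID" --set properties.enableSoftDelete=true
    EOT

    # Hypothetical variable names; values come from the existing Terraform variables.
    environment = {
      AZ_CLIENT_ID     = var.client_id
      AZ_CLIENT_SECRET = var.client_secret
      AZ_TENANT_ID     = var.tenant_id
      KEY_VAULT_ID     = azurerm_key_vault.kvpoc.id
    }
  }
}
```

If used, this block would take the place of the two-provisioner version rather than sit alongside it, since both perform the same update.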

Variables.tf: this file holds all the variables and their values.

    variable "location" {
      default = "East US"
    }

    variable "resourcegroupname" {
      default = "poc"
    }

    variable "kvname" {
      default = "aslsoftkvdemo"
    }

    variable "tenant_id" {
      default = "[TENANT ID]"
    }

    variable "client_secret" {
      default = "[CLIENT SECRET]"
    }

    variable "client_id" {
      default = "[CLIENT ID]"
    }
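Because a variables file usually ends up in source control, one option is to declare the secret without a default and supply it at run time. A minimal sketch, assuming Terraform 0.14 or later for the `sensitive` argument; the description text is illustrative:

```hcl
variable "client_secret" {
  description = "Client secret of the service principal used by the azurerm provider and az login"
  type        = string

  # Hides the value in plan/apply output (assumes Terraform 0.14+).
  sensitive = true

  # No default on purpose: set the TF_VAR_client_secret environment variable
  # before running Terraform, or enter the value when Terraform prompts for it.
}
```

This declaration would replace the `client_secret` variable shown above; the other variables stay as they are.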

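To run the above Terraform script, run the commands below:

- terraform init: needed once in a new working directory. It downloads the providers that the configuration uses.

- terraform plan: it shows what changes Terraform will make to the infrastructure.

- terraform apply: it implements the infrastructure changes.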

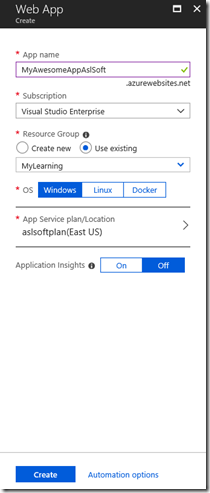

I have added a screenshot to show that the soft delete option has been enabled.
